As a hobby project, I have been working on a VST plugin lately. For people who are unaware of what a VST plugin is (I assume this is most people), it’s basically an audio effect or instrument used with DAWs (digital audio workstations). To simplify the concept even further, it’s a piece of software that either creates or modifies an audio or MIDI signal.

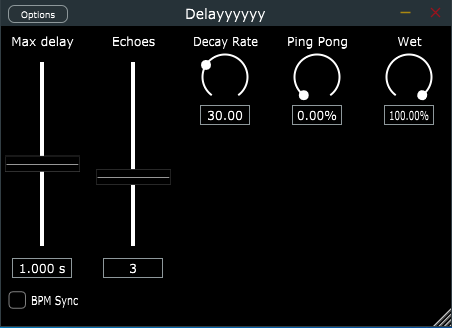

The plugin I’ve been working on for the past few months is an audio delay/echo plugin. It takes an audio signal and repeats it a given number of times, up to a certain maximum delay value. It also has a few simple options: a decay time for deciding how quickly the repeated signal levels decay, and a “ping pong” to alternate the left and right sides of the signal.

Now that I’ve briefly explained what the project is about, I can talk about my latest addition to the plugin: BPM-synced delay (basically a delay that is synced to the rhythm of the music). I’ll admit, this may feel a bit like jumping onto a moving train when there’s existing code that hasn’t been explained in previous blog posts, but I’ll try my best to keep this simple & understandable. Here’s a link to the PR that contains the BPM sync feature I’ll be talking about in this blog post.

There are changes to two classes, PluginEditor and PluginProcessor. The former is responsible for the UI, and the latter for the actual signal processing.

In PluginEditor, the changes were quite simple. I added a BPM sync checkbox that toggles the visibility of the synced and unsynced delay sliders. I actually had most of the stuff already in place, just commented out (commenting out code is definitely the best method of version control, trust me on this, I’ve had “senior” in my title for three whole months /s). The checkbox’s value is also used on the PluginProcessor side to see if the delay should be synced or not. I’m using CustomSliders to have a custom look and feel on the sliders, but everything should work equally well (or even better) with a regular slider class.

There are also some UI gymnastics going on to get everything aligned correctly. The UI should be resizable (kinda), which causes its own headaches there. Possibly the most interesting thing is the custom textFromValueFunction that allows showing musical note lengths (such as 1/4 or 1/8) instead of raw slider values in the slider value box. Besides these, I also had to inherit from the Button listener to be able to update the UI and delay parameters whenever the user clicks the button. There are more elegant ways to do this, but I think for two lines of code in a hobby project this is a sufficient solution.
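To make the value-to-text idea concrete, here is a minimal sketch of such a mapping. It is not the code from the PR: the function name `syncedDelayText`, the slider range of -4 to 4, and the use of `std::string` are all assumptions made for illustration (in JUCE, `Slider::textFromValueFunction` returns a `juce::String`).

```cpp
#include <algorithm>
#include <cmath>
#include <string>

// Hypothetical helper: maps a slider step to a note-length label.
// Assumed convention: step 0 is one bar, each step up doubles the length,
// each step down halves it (step -4 = 1/16 of a bar, step 4 = 16 bars).
std::string syncedDelayText(double sliderValue)
{
    // Round to the nearest whole step and keep it inside the assumed range.
    const int rounded = static_cast<int>(std::lround(sliderValue));
    const int step = std::min(4, std::max(-4, rounded));

    if (step >= 0)
        return std::to_string(1 << step);          // "1", "2", "4", "8", "16"

    return "1/" + std::to_string(1 << (-step));    // "1/2", "1/4", "1/8", "1/16"
}
```

With this convention, a slider value of -2 would be shown as `1/4` and a value of 3 as `8`.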

Simple so far? Well, things should get a bit more interesting (i.e. complicated) in PluginProcessor. First of all, I had to add parameters for the synced delay toggle and synced delay amount to the ValueTree. ValueTree is a class that, you guessed it, stores different values and helps with handling them.

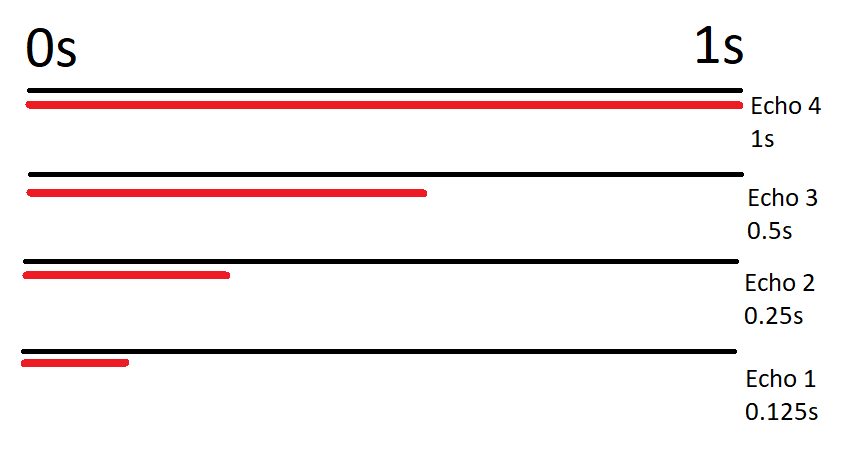

However, maybe the most important functional changes in the PR are to the setDelayBufferParams function, and that could definitely use some explanation. The delay in this plugin works by creating a vector of size N, where N is the number of echoes that we hear back. This vector is then filled with ring buffers, and these buffers have read and write pointers that are offset by a certain amount from each other. There’s a picture after this, and I hope that it’ll explain the idea better than two paragraphs of words and poorly thought-out mathematical formulas.

The offset between read and write is calculated from the maximum delay time X and the echo amount Y that the user sets in the UI. The first buffer gets a value of X/2^(Y-1), the second X/2^(Y-2), and so on, until the last buffer gets X/2^(Y-Y) = X/2^0 = X. This creates an interesting (yeah for sure) non-linear, yet fairly symmetrical delay effect. Please note that the first echo buffer has the smallest offset between read and write pointers.
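As a rough sketch, the offsets could be computed like this, reading the scheme as "the maximum delay halved once for every echo that still follows", so that buffer k out of Y gets X/2^(Y-k). The function name `echoOffsets` and the choice to measure everything in samples are assumptions for illustration, not the plugin's actual code.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical helper: maxDelaySamples is X, echoCount is Y.
// Buffer k (counting from 1) gets an offset of X / 2^(Y - k).
// Assumes echoCount is small (well below the bit width of std::size_t).
std::vector<std::size_t> echoOffsets(std::size_t maxDelaySamples, std::size_t echoCount)
{
    std::vector<std::size_t> offsets;
    offsets.reserve(echoCount);

    for (std::size_t k = 1; k <= echoCount; ++k)
    {
        const std::size_t divisor = std::size_t{1} << (echoCount - k); // 2^(Y - k)
        offsets.push_back(maxDelaySamples / divisor);                  // integer division
    }

    return offsets;
}
```

For example, a maximum delay of 48000 samples with 4 echoes gives offsets of 6000, 12000, 24000 and 48000 samples: the first buffer has the smallest offset and the last one equals the maximum delay.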

ANYWAY, after this offset is calculated, the write pointer is set to this value while the read pointer is set to zero. When an audio signal comes in, the buffer is first read to obtain the previously written, delayed signal (if there is one). Then the incoming signal, with ping pong and decay applied where enabled, is written to the buffer. Finally, the value read earlier is added to the incoming signal. This is then repeated for all the buffers. Since each buffer has a different, greater-than-zero offset between its write and read pointers, the audio signal gets repeated at different intervals, creating a delay effect. Wowzers!
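The read, write, add sequence for a single buffer might look roughly like the sketch below. The class name `EchoBuffer`, the plain gain used for decay, and the omission of ping pong are all simplifications I'm assuming here; the real plugin code is more involved.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical single echo buffer: a ring buffer whose write pointer
// starts `offset` samples ahead of the read pointer.
class EchoBuffer
{
public:
    // Assumes capacity > 0 and capacity > offset, so the pointers start apart.
    EchoBuffer(std::size_t capacity, std::size_t offset, float decayGain)
        : data(capacity, 0.0f), writePos(offset % capacity), decay(decayGain) {}

    // Returns the input mixed with whatever was written `offset` samples ago.
    float process(float input)
    {
        const float delayed = data[readPos];      // 1. read the old, delayed sample
        data[writePos] = input * decay;           // 2. write the decayed input
        readPos = (readPos + 1) % data.size();    //    advance both pointers,
        writePos = (writePos + 1) % data.size();  //    wrapping around the ring
        return input + delayed;                   // 3. add the echo to the input
    }

private:
    std::vector<float> data;
    std::size_t readPos = 0;
    std::size_t writePos;
    float decay;
};
```

With an offset of 3 and a decay gain of 0.5, a single impulse of 1.0 comes straight through on the first call and shows up again as 0.5 three calls later.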

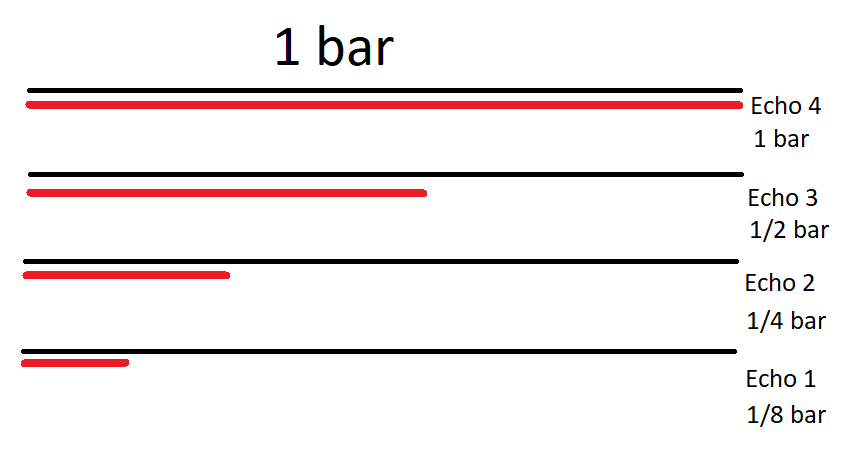

Now, how does this idea work in a rhythmically synced world? Well, time for a little music theory for a change. 97% of modern Western music is in 4/4 time, meaning that there are four kick drum hits in a bar, and music is constructed of building blocks with a size of four bars (gross oversimplification, but I don’t want to end up neck-deep in music theory because I’ll explain it wrong). So in a track with a BPM of 120 there are 120 kick drum hits in a minute, and 30 bars in a minute.

This gets a bit subjective from here, but I personally like synced delays to be the length of one bar divided or multiplied by powers of two, to create a nice rhythm that sounds good on most music. This means that the delay gets values like 1/2, 1/4, 1/8, and 1/16 of a bar when going shorter than one bar, and values like 2, 4, 8 & 16 bars when lasting longer than one bar. When we know the beats per minute, or BPM, it becomes fairly trivial to calculate write and read pointer offsets that are rhythmically synced. Instead of using the user-set value for the maximum delay, we calculate the time that a certain number of beats takes at a given BPM.
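The arithmetic behind this is short enough to sketch. The helper below assumes 4/4 time and takes the sample rate as a parameter; the name `syncedDelaySamples` and its signature are made up for this example rather than taken from the plugin.

```cpp
#include <cmath>
#include <cstddef>

// Hypothetical helper: length of a synced delay in samples.
// Assumes 4/4 time (four beats per bar). `bars` may be fractional,
// e.g. 0.25 for a quarter of a bar or 2.0 for two bars.
std::size_t syncedDelaySamples(double bpm, double bars, double sampleRate)
{
    const double secondsPerBeat = 60.0 / bpm;
    const double secondsPerBar = 4.0 * secondsPerBeat;
    return static_cast<std::size_t>(std::llround(bars * secondsPerBar * sampleRate));
}
```

At 120 BPM one bar lasts 2 seconds, so at a sample rate of 44100 Hz a one-bar delay is 88200 samples, a 1/4-bar delay is 22050 samples, and a 16-bar delay is 1411200 samples (32 seconds).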

Hopefully, you’ve understood what I’ve tried to say with this. To make this code change slightly more confusing, there are some optimization changes thrown in there as well. Basically, earlier I had a static 10-second size for the delay buffers. However, with synced delays this may not be enough, and for the shorter unsynced delays it is way too much. So I set the buffer’s size to be equal to the write pointer offset, because there is no point in having the buffer be longer than that. The ring buffer will wrap around more often, but that isn’t really an issue because old values are not needed.
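One way to convince yourself that a buffer exactly as long as the offset is enough: once the write position wraps around, it lands on the same slot as the read position, so reading before writing still returns the sample from exactly one buffer length ago. The sketch below shows only that idea, with a hypothetical `FittedDelayLine` class and a single shared index; it is not how the plugin's buffers are written.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical delay line whose storage is exactly as long as the delay.
class FittedDelayLine
{
public:
    // A zero-length delay is bumped to one sample to keep the buffer non-empty.
    explicit FittedDelayLine(std::size_t delaySamples)
        : buffer(delaySamples > 0 ? delaySamples : 1, 0.0f) {}

    float process(float input)
    {
        const float delayed = buffer[index];   // written buffer.size() calls ago
        buffer[index] = input;                 // overwrite it; the old value is no longer needed
        index = (index + 1) % buffer.size();   // wrap around the ring
        return delayed;
    }

    std::size_t size() const { return buffer.size(); }

private:
    std::vector<float> buffer;
    std::size_t index = 0;
};
```

Feeding 1, 2, 3, 4 into a three-sample line returns 0, 0, 0, 1, so the delay is the full buffer length even though no extra space was allocated.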

And that’s how I added BPM-synced delays to my VST plugin. The theory is quite simple once you get the idea of how I implemented the delay buffers; after that, it’s just a matter of modifying the write pointer offset. The rhythm is still hardcoded to 120 BPM, but that’ll be fixed in the next PR. There’s also one bug in this implementation that I’ve already fixed on my local branch, but I’ll leave the details on that as a cliffhanger for my next blog post.